2022 Research Review / DAY 1

AI Engineering in an Uncertain World

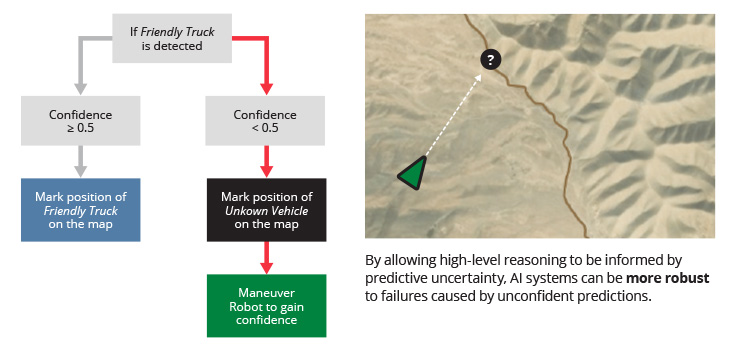

The DoD is increasingly seeking to deploy AI systems for mission-critical tasks. Modern AI systems most commonly employ machine learning (ML) models to make important, domain-relevant inferences. However, due in part to uncertainty, state-of-the-art ML models can produce inaccurate inferences in scenarios where humans would reasonably expect high accuracy. Furthermore, many commonly used models do not accurately estimate how uncertain they are about their predictions. Consequently, AI system components downstream from an ML model, or humans using the model's output to complete a task, must reason with incorrect inferences that they expect to be correct. Motivated by this gap, this project aims to accomplish the following objectives:
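One standard way to check whether a model's confidence estimates are accurate is expected calibration error (ECE): group predictions by stated confidence and compare each group's average confidence with its actual accuracy. The sketch below is a generic illustration of that metric, not a description of this project's tooling; the function name and the choice of ten equal-width bins are assumptions.

```python
from typing import Sequence


def expected_calibration_error(
    confidences: Sequence[float],
    correct: Sequence[bool],
    n_bins: int = 10,
) -> float:
    """Expected calibration error (ECE) using equal-width confidence bins.

    confidences: the model's confidence in its predicted class, in [0, 1].
    correct: whether each prediction matched the true label.
    """
    if len(confidences) != len(correct):
        raise ValueError("confidences and correct must have the same length")
    n = len(confidences)
    if n == 0:
        raise ValueError("need at least one prediction")

    # Per-bin count, summed confidence, and number of correct predictions.
    counts = [0] * n_bins
    conf_sums = [0.0] * n_bins
    hits = [0] * n_bins
    for c, ok in zip(confidences, correct):
        if not 0.0 <= c <= 1.0:
            raise ValueError("confidence values must lie in [0, 1]")
        # Bin index; a confidence of exactly 1.0 falls into the last bin.
        b = min(int(c * n_bins), n_bins - 1)
        counts[b] += 1
        conf_sums[b] += c
        hits[b] += int(ok)

    # Weighted average of |accuracy - mean confidence| over non-empty bins.
    ece = 0.0
    for k in range(n_bins):
        if counts[k]:
            acc = hits[k] / counts[k]
            avg_conf = conf_sums[k] / counts[k]
            ece += (counts[k] / n) * abs(acc - avg_conf)
    return ece


# An overconfident model: 95% confidence on every input, but only 6 of 10 correct.
print(expected_calibration_error([0.95] * 10, [True] * 6 + [False] * 4))
```

A well-calibrated model has an ECE near zero; the overconfident example above yields roughly 0.35, the gap between its 95% stated confidence and its 60% observed accuracy.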

- Develop new techniques, and utilize existing ones, to give ML models the ability to express when they are likely to be wrong without drastically increasing the computational burden, requiring significantly more training data, or sacrificing accuracy.

- Develop techniques to detect the cause of uncertainty, learning algorithms that improve ML models once that cause has been determined, and methods for reasoning in the presence of uncertainty without explicit retraining.

- Incorporate uncertainty modeling, and methods to increase certainty, into the ML models of government organizations.
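To make the first two objectives concrete, one widely used approach (shown here for illustration only, not as this project's method) lets an ensemble of models express both how uncertain a prediction is and, roughly, why. The entropy of the averaged prediction (total uncertainty) splits into the members' average entropy (aleatoric, or data, uncertainty) plus their disagreement (epistemic, or model, uncertainty, equal to a mutual information term). The function names are hypothetical, and note that an ensemble of M members costs M forward passes, which is the kind of computational burden the first objective seeks to avoid.

```python
import math
from typing import Sequence, Tuple


def entropy(probs: Sequence[float]) -> float:
    """Shannon entropy in nats; classes with probability 0 contribute nothing."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def decompose_uncertainty(
    member_probs: Sequence[Sequence[float]],
) -> Tuple[float, float, float]:
    """Split one input's predictive uncertainty across ensemble members.

    member_probs[m][k] is ensemble member m's probability for class k.
    Returns (total, aleatoric, epistemic):
      total     = entropy of the ensemble's averaged prediction
      aleatoric = average entropy of the individual members' predictions
      epistemic = total - aleatoric (members' disagreement; >= 0 up to rounding)
    """
    if not member_probs:
        raise ValueError("need at least one ensemble member")
    m = len(member_probs)
    k = len(member_probs[0])
    mean = [sum(p[j] for p in member_probs) / m for j in range(k)]
    total = entropy(mean)
    aleatoric = sum(entropy(p) for p in member_probs) / m
    return total, aleatoric, total - aleatoric


# Members confidently disagree: uncertainty comes from the model (epistemic).
print(decompose_uncertainty([[1.0, 0.0], [0.0, 1.0]]))
# Members agree the input is ambiguous: uncertainty comes from the data (aleatoric).
print(decompose_uncertainty([[0.5, 0.5], [0.5, 0.5]]))
```

Both example inputs have the same total uncertainty (ln 2), but the decomposition attributes it to different causes: high epistemic uncertainty suggests more or better training data could help, while high aleatoric uncertainty suggests the input itself is ambiguous.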

This SEI research team has made real progress on its goal of detecting model uncertainty and mitigating its effects on the quality of model inference.

Dr. Eric Heim, Senior Research Scientist

Our work seeks to realize three overarching benefits. First, ML models in DoD AI systems will be made more transparent, resulting in safer, more reliable use of AI in mission-critical applications.

Second, ML models will be more quickly and efficiently updated to adapt to dynamic changes in operational deployment environments. Third, we will make adoption of AI possible for missions where AI is currently deemed too unreliable or opaque to be used.

Quantifying Uncertainty: A Key Component for Informative and Robust AI Systems

Our SEI team of Eric Heim, John Kirchenbauer, Jon Helland, and Jake Oaks brings expertise in the science and engineering of AI systems, human-computer interaction, enterprise-level infrastructures, and perspectives informed by a collective 50 years of experience leading and conducting projects for both government and industry. Our CMU collaborators Dr. Zachary Lipton and Dr. Aarti Singh bring expertise in monitoring and improving ML models in the presence of uncertainty.

Now in the second year of this three-year project, the team has made progress toward its goal of detecting model uncertainty and mitigating its effects on the quality of model inference by using uncertainty to characterize types of errors and by applying uncertainty quantification to object detection.

In Context

This FY2021–2023 project

- builds on DoD line-funded research, including graph algorithms and future architectures; big learning benchmarks; automated code generation for future-compatible high-performance graph libraries; data validation for large-scale analytics; and events, relationships, and script learning for situational awareness

- aligns with the CMU SEI technical objective to be timely so that the cadence of acquisition, delivery, and fielding is responsive to and anticipatory of the operational tempo of DoD warfighters and that the DoD is able to field these new software-enabled systems and their upgrades faster than our adversaries

- aligns with the DoD software strategy to accelerate the delivery and adoption of AI

Principal Investigator

Dr. Eric Heim

Senior Research Scientist—Machine Learning

SEI Collaborators

John Kirchenbauer

Machine Learning Engineer, AI Division

Jon Helland

Machine Learning Researcher, AI Division

Jacob Oaks

Associate Developer, AI Division

External Collaborators

Dr. Aarti Singh

Associate Professor, Machine Learning Department

Carnegie Mellon University

Dr. Zachary Lipton

Assistant Professor, Machine Learning Department

Carnegie Mellon University