2020 Year in Review

Game-Based Design Improves AI Support of Human Decision Making

Even the most advanced artificial intelligence (AI) system can fail if it does not meet the needs of its human operators. After observing several deployed AI decision support systems within federal and state governments and the Department of Defense (DoD), Rotem Guttman, a senior cybersecurity researcher at the SEI, noticed a common issue. “Humans make poor choices when deciding to rely on or ignore existing AI decision support systems,” he said. “In some cases, these systems are being wholly abandoned despite the fact that the underlying model was operating as designed.”

Perceiving that these systems failed to enable end users to intuitively understand and complete tasks, Guttman’s team worked to develop the Human-AI Decision Evaluation System (HADES), a test harness allowing researchers to collect human decision-making data on large sets of possible AI interfaces.

The team’s initial research indicated numerous factors that could be affecting AI system usability. They suspected that a failure to communicate model output meaningfully for the user could be contributing to poor decision making. The team identified more than 400 possible categories of interface design based on a set of candidate design elements. They needed a mechanism to determine which design practices would most affect decision making. Their goal was to develop a system for collecting data on real human decision making within a chosen domain and determine the appropriate best practices for AI system interface design for that domain.

To collect this data, a human would need to make the same type of decision over and over again with slightly different available information. “The problem is that humans aren’t machines and will very quickly tire of such a monotonous task,” noted Guttman.

The team realized that repeated decision making is a common characteristic of games, so they used game-based design techniques to reduce task fatigue and keep participants engaged. By opening the HADES interface to game designers for integration with game environments, the team enabled the system to collect players’ decision-making data in response to a variety of AI system advice.

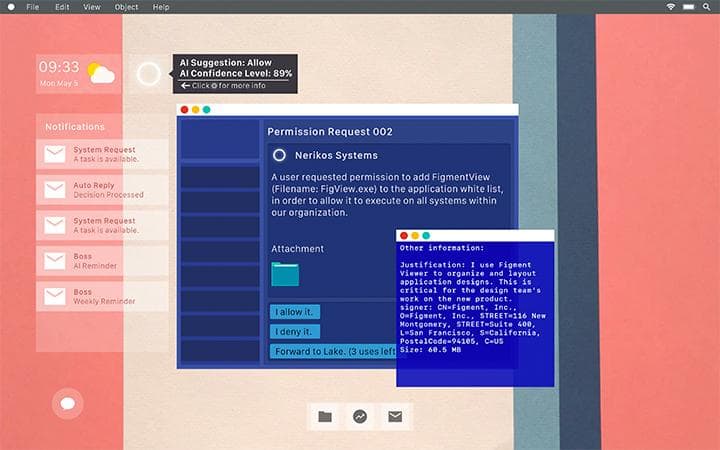

As a test case, the SEI’s collaborators at Carnegie Mellon University’s Entertainment Technology Center developed a game that put approximately 350 players in the role of a system administrator making decisions about what software should be added to a software safelist. Depending on their assigned experimental condition, players received the AI’s recommendation in a simple summary or more detailed output. The system tracked what information the users chose to review, how long they spent on each element, and whether their final decision was correct or incorrect.
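To make the tracked quantities concrete, the following is a minimal sketch of what one logged decision might look like. It is not the HADES schema: the class name `TrialRecord`, its fields, and the element names are assumptions introduced only for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class TrialRecord:
    """One player decision as a hypothetical log entry (not the HADES schema)."""

    player_id: str
    condition: str          # assumed labels, e.g. "summary" or "detailed"
    ai_recommendation: str  # assumed labels, e.g. "allow" or "deny"
    player_decision: str
    correct_decision: str   # ground truth for the scenario
    seconds_by_element: Dict[str, float] = field(default_factory=dict)

    def view(self, element: str, seconds: float) -> None:
        """Accumulate the time the player spent reviewing one interface element."""
        self.seconds_by_element[element] = (
            self.seconds_by_element.get(element, 0.0) + seconds
        )

    @property
    def elements_reviewed(self) -> List[str]:
        """Elements the player chose to review, in first-viewed order."""
        return list(self.seconds_by_element)

    @property
    def is_correct(self) -> bool:
        return self.player_decision == self.correct_decision

    @property
    def followed_ai(self) -> bool:
        return self.player_decision == self.ai_recommendation


# Made-up example: the player overrides the AI and turns out to be right.
trial = TrialRecord("p-001", "detailed", "allow", "deny", "deny")
trial.view("ai_summary", 4.0)
trial.view("confidence", 2.5)
trial.view("ai_summary", 1.0)
```

Keeping reliance (`followed_ai`) separate from correctness (`is_correct`) matters here, because a player can ignore good advice or follow bad advice, and the two cases call for different interface changes.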

One finding was that players trusted AI recommendations more when the stakes of the decision were higher, unless they were also given information about the AI’s degree of confidence. Feedback of this kind on the quality of users’ decisions can be mapped back to their recorded interactions with the system to determine which elements promote or prevent optimal decision making. Once a system design is selected and built, HADES can verify and validate that the system operates as intended when used by a human in the field.
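One way to picture mapping decision quality back to recorded interactions is a per-condition summary of how often players followed the AI and how often they were right. The sketch below is illustrative only: the trial tuples are invented placeholder values rather than study results, and the function name `summarize` is an assumption.

```python
from collections import defaultdict

# Hypothetical logs as (condition, followed_ai, correct).
# These values are placeholders, not data from the study.
trials = [
    ("summary", True, True),
    ("summary", True, False),
    ("summary", False, True),
    ("detailed", True, True),
    ("detailed", False, True),
    ("detailed", True, True),
    ("detailed", False, False),
]


def summarize(trials):
    """Return per-condition trial count, reliance rate, and accuracy."""
    counts = defaultdict(lambda: {"n": 0, "followed": 0, "correct": 0})
    for condition, followed_ai, correct in trials:
        c = counts[condition]
        c["n"] += 1
        c["followed"] += int(followed_ai)
        c["correct"] += int(correct)
    return {
        condition: {
            "n": c["n"],
            "reliance_rate": c["followed"] / c["n"],
            "accuracy": c["correct"] / c["n"],
        }
        for condition, c in counts.items()
    }


summary = summarize(trials)
for condition, stats in summary.items():
    print(condition, stats)
```

Comparing these rates across experimental conditions is one simple way to see whether a given interface element is associated with better or worse decisions; a real analysis would also need appropriate statistical testing.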

HADES builds on previous SEI research that frames the entire AI system development process with a focus on the human. HADES can slot in an actual AI-enabled decision support system or simulate one, supporting multiple experimental designs to find the most intuitive one for end users prior to building the system. This key capability allows software acquirers to draft their system requirements with great accuracy. The same HADES harness can be connected to the delivered system to assure that it meets the specified design criteria, saving resources on both ends of the development cycle.


For now, HADES remains a prototype, but it could open a path for building government and DoD AI decision support systems that truly take the human into account.

To play the game yourself, go to https://hades.cmusei.dev/.
