xView 2 Challenge

Created July 2020

Assessing building damage after a disaster is a critical first step in disaster response. Unfortunately, this task can be slow, labor-intensive, and dangerous. The xView 2 Challenge explored how to assess disaster damage by using computer vision algorithms to analyze satellite imagery. The competition’s sponsor was the Department of Defense’s Defense Innovation Unit (DIU).

Competitors in the xView 2 Challenge applied machine learning techniques to evaluate satellite images of buildings before and after disasters, including wildfires, landslides, dam collapses, volcanic eruptions, earthquakes, tsunamis, storms, and floods. The algorithms automatically found buildings and then classified their damage in accordance with the Joint Damage Scale. By sponsoring this challenge, DIU hopes to speed recovery efforts after natural or human-caused disasters.

The algorithms developed for the xView 2 Challenge are being used to assess building damage for wildfires in the United States and Australia.

Assessing Building Damage: Slow, Difficult, and Dangerous

Disasters can strike at any place and any time. Effective disaster response depends on quickly and accurately assessing the situation in the affected area. Before responders can act, they need to know the location, cause, and severity of damage to homes, schools, hospitals, businesses, and other structures.

Currently, disaster response depends on in-person assessments of building damage within one to two days after a disaster occurs. But disasters also damage communication and transportation systems, making this process difficult and slow. Performing these assessments can be dangerous, as they require people on the ground to directly inspect damaged structures during or immediately after a disaster.

Satellites are playing an increasingly important role in helping emergency responders assess the damage from a disaster and find victims in its aftermath. Most recently, satellites have tracked the devastation wrought by the California and Australia wildfires.

Damage assessments from overhead imagery could supplement or even replace in-person assessments. However, assessing damage is not an easy task. Although satellites provide unmatched overhead views of a disaster area, this raw imagery is not enough to guide response and recovery efforts. High-resolution images are needed to spot damaged and destroyed structures. But because disasters cover large areas, analysts must search through huge numbers of images to localize and classify building damage. This annotated imagery must then be summarized and communicated to the recovery team. The entire process is time-consuming, laborious, and prone to human error. On top of that, analysts can face storage, bandwidth, and memory challenges when processing satellite data and communicating their results.

Our Collaborators

- Defense Innovation Unit (DIU), Department of Defense (DoD)

- Carnegie Mellon University School of Computer Science

- Joint Artificial Intelligence Center (JAIC)

- National Aeronautics and Space Administration (NASA)

- National Geospatial-Intelligence Agency (NGA)

- California Department of Forestry and Fire Protection (CAL FIRE)

- California Air National Guard

- Federal Emergency Management Agency (FEMA)

- United States Geological Survey (USGS)

- California Governor's Office of Emergency Services (CalOES)

- National Security Innovation Network (NSIN)

- UC Berkeley AI Research Lab

- CrowdAI, Inc.

- Australian Geospatial Intelligence Organisation (AGO)

The xView 2 Challenge: Using ML to Assess Building Damage from Satellite Imagery

The U.S. Department of Defense is often first on the scene after a disaster. It would like to automatically identify damaged structures in satellite imagery to support humanitarian assistance and disaster recovery (HADR) efforts. Applying computer vision algorithms to aerial images has enormous potential to speed up damage assessments and reduce the danger to human life.

The xView 2 Challenge

Recognizing an opportunity to address a critical disaster response need, the Defense Innovation Unit (DIU), together with the Software Engineering Institute (SEI) and several HADR organizations, launched the xView 2 Challenge for the computer vision community. The goal of the xView 2 Challenge was to jump-start the creation of accurate, efficient machine learning models that assess building damage from pre- and post-disaster satellite imagery. SEI built the baseline model for the challenge and helped to administer it.

Participants in the xView 2 Challenge competed to swiftly and accurately find and classify damaged buildings in high-resolution satellite images from a wide spectrum of natural disasters. When expert human analysts examine satellite imagery after a disaster, they apply contextual knowledge about the geography of the area and the weather or disaster conditions. xView 2 Challenge participants replicated this process by applying deep learning and other sophisticated computer vision techniques to a labeled dataset of satellite imagery. Their goal was to create the fastest and most accurate algorithms to detect and assess building damage.

The xBD Dataset

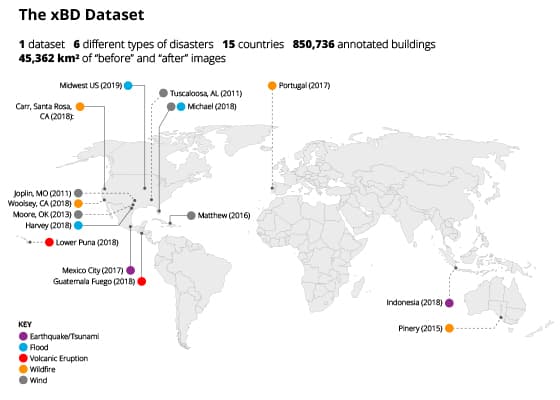

SEI researchers built xBD, a large-scale dataset of labeled satellite images, specifically for the xView 2 Challenge, and all competitors used it. It’s one of the biggest, most comprehensive, and highest-quality public datasets of annotated, high-resolution satellite imagery.

The xBD dataset contains “before” and “after” satellite images from disasters around the world: wildfires, landslides, dam collapses, volcanic eruptions, earthquakes, tsunamis, storms, and floods. SEI worked with disaster response experts to create the Joint Damage Scale, an annotation scale that accurately represents real-world damage conditions. Every image is labeled with building outlines, damage levels, and satellite metadata. The dataset also includes bounding boxes and labels for environmental factors such as fire, water, and smoke.
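To make the labeling described above more concrete, here is a minimal Python sketch of how the building labels for one image might be summarized by damage level. The four level names follow the published description of the Joint Damage Scale. Everything else is an assumption made for illustration: the record layout, the field names (`metadata`, `buildings`, `outline`, `damage`), and the helper `summarize_damage` are hypothetical and are not the dataset's actual schema or any competitor's code.

```python
# Damage levels of the Joint Damage Scale, ordered from least to most severe.
DAMAGE_LEVELS = ["no-damage", "minor-damage", "major-damage", "destroyed"]

# Hypothetical, simplified label record for one post-disaster image.
# Field names are illustrative only and do not mirror the real xBD files.
sample_label = {
    "metadata": {"disaster_type": "wildfire", "width": 1024, "height": 1024},
    "buildings": [
        {"outline": [(10, 10), (40, 10), (40, 30), (10, 30)], "damage": "destroyed"},
        {"outline": [(60, 12), (90, 12), (90, 35), (60, 35)], "damage": "destroyed"},
        {"outline": [(15, 50), (45, 50), (45, 80), (15, 80)], "damage": "major-damage"},
        {"outline": [(70, 55), (95, 55), (95, 85), (70, 85)], "damage": "no-damage"},
    ],
}


def summarize_damage(label):
    """Count the labeled buildings in one image at each damage level."""
    counts = {level: 0 for level in DAMAGE_LEVELS}
    for building in label["buildings"]:
        level = building["damage"]
        if level not in counts:
            raise ValueError(f"unknown damage level: {level!r}")
        counts[level] += 1
    return counts


if __name__ == "__main__":
    summary = summarize_damage(sample_label)
    for level in DAMAGE_LEVELS:
        print(f"{level}: {summary[level]}")
```

A per-image tally like this is one simple way the annotated imagery could be condensed before it is communicated to a recovery team. A real pipeline would first need to read the dataset's own label files.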

The xBD dataset is available for public use under a Creative Commons license.

Looking Ahead

Machine learning models from the xView 2 Challenge identified damaged buildings for wildfires in Australia and California. These models will assist with HADR efforts now and in the future. Being able to quickly and accurately assess building damage will speed up disaster response and recovery. Applying deep learning to satellite imagery will not only help identify where crises are occurring, but also help responders save human lives.